A Mobile Computing System for Testing Wearable Augmented Reality User Interface Design

Tom Sephton, Jon Black, Gaber El Naggar, Anthony Fong

Multimedia Graduate Program, California State University, Hayward

tom@funhousefilms.com

jon@ita.sel.sony.com

gaber@ibm.net

afong@ea.com

Abstract

This paper describes a mobile augmented reality system developed to test user interface designs appropriate for future wearable computers. As the graphics, sound, and processing capabilities of wearable computer systems improve to meet and exceed current desktop standards, new types of user interfaces become possible. Data can be located in real-world space and reached by physically walking up to it. Interaction with a wearable computer can be modeled on familiar activities like conversation. The system described in this paper enables a wearer to walk in ordinary open outdoor environments and interact with 3D objects and characters seen through a semi-transparent head-mounted display and heard in 3D sound. The system uses low-cost desktop computer technology worn on a backpack. The primary interaction is through speaking with 3D animated characters, which ask questions and respond to the wearer's answers and location. This system is enabling ongoing tests of user interface concepts that could make future wearable computers easy for the general public to use.

1. Introduction

Wearable computers present new challenges in the design of human-computer interfaces. Common input devices like the mouse and keyboard are very awkward for a mobile system. The desktop metaphor in most graphical user interfaces is intuitive in an office setting but not in a highly mobile context. Familiar human interaction and navigation methods can replace both the desktop metaphor and these input devices. Navigation to access computer data can be accomplished by literally walking around in the real world. Abstract data can be represented as three-dimensional objects, such as 3D charts and graphs, placed in that space. For data with a real-world context, like architectural models, the location of the nearest post office, or restaurant reviews posted by customers at the front door [1], the navigation is physically obvious. Walking up to something of interest and looking at it is a very intuitive navigation method.

While point-and-click was a major advance in easy interaction with common desktop computers, wearable computers would benefit from a means of interaction not tied to a keyboard or a flat screen. Reach-out-and-touch tactile interactions are attractive, but currently require cumbersome or expensive hardware. Alternatively, interaction with the computer can be modeled on human conversation. Voice recognition enables talking interfaces that are well suited to the mobile, hands-free capabilities of wearable computers. While natural language recognition techniques are not yet able to provide a true conversational interface, well-scripted, engaging question-and-answer interactions can provide control as sophisticated as the menu options in most graphical user interfaces.

This paper presents a Mobile Augmented Reality System sufficient to test these interface concepts. The system permits real time overlay of 3D objects and 3D sounds located in real world space as the user walks about outdoors. Our current test platform is a backpack computer system with a head mounted display, differential GPS position tracking, passive magnetic orientation tracking, hardware accelerated 3D animation, 3D sound, voice recognition, and a wireless Internet connection.

2. Interface Design Criteria

The design and implementation of the system arose from a set of goals describing what the user experience should be. The overall goal was to create a user interface appropriate for wearable computers targeted to use by the general public. Interface goals for professional users would differ in many respects but some principles apply to both groups.

3. System Design Criteria

The interface design goals to be tested imply the essential components of the system. To provide a sense of immersion, a head-mounted display was a necessary choice. The system had to adapt rapidly to the wearer's location and orientation, updating the display and audio according to where the user walks and looks. To create a believable conversational interaction with an animated character, voice recognition, fast graphics, and sound output capability were essential. In general, the design goals and end result conform to the definition of wearable computers put forth by Kortuem, Segall, and Bauer [3], including hands-free or one-handed operation, mobility, augmented reality, and sensors and perception. The design criteria applied were the following:

4. Hardware Implementation

After working on our own head mounted display and testing the M-1 and Micro-optical units we selected a 1998 model Sony Glasstron for its wide field of view, color display, relatively low cost, and compatibility with users wearing eyeglasses. The headphones built into the Glasstron are also adequate for playback of 3D audio from the computer. A noise canceling microphone on a flexible arm has been attached to the left headphone support of the Glasstron for placement near the user's mouth.

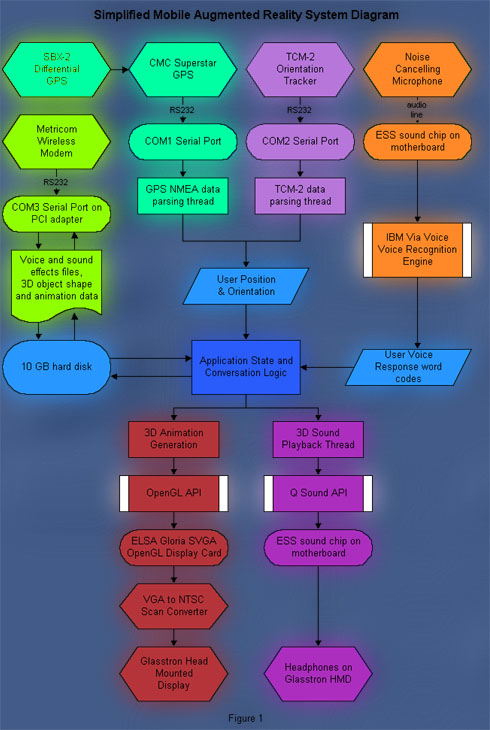

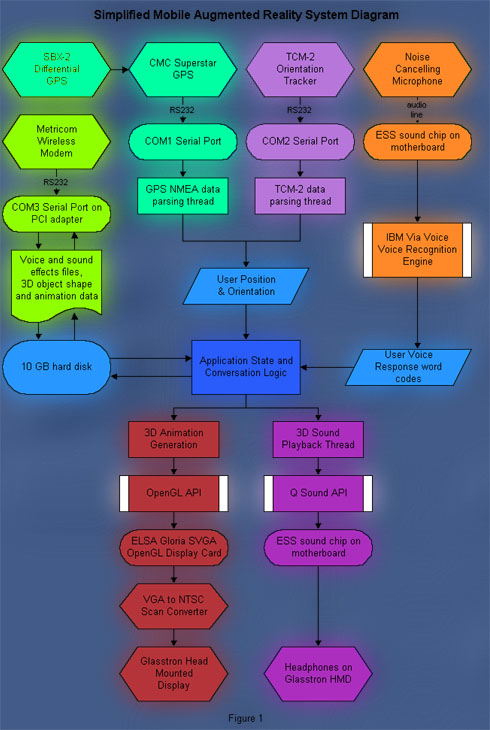

The high priority we gave to real-time 3D augmented reality graphics and sound caused us to choose a test system that is essentially a mini desktop computer mounted on a backpack frame. Fast CPU processing and hardware-accelerated 3D graphics were essential. These were expensive to obtain in a laptop or notebook computer and unavailable in a wearable at the time the hardware was assembled (January/February 1999). The core computer system uses a single 400 MHz Intel Pentium II CPU on a Chaintech 6BSA micro ATX motherboard with 128 MB of RAM. The motherboard has built-in ESS Maestro sound chips, AGP2 and PCI slots, and two COM ports. A 10 GB Seagate Medalist hard drive provides more storage capacity than the system requires. See Figure 1 for a diagram of essential hardware and software components.

An ELSA Gloria Synergy AGP video display card was chosen for strong 3D hardware acceleration performance in a low-cost card. The card has performed well with simple characters lacking texture maps. Unfortunately, its built-in NTSC video output cuts performance so drastically that it is unusable. Instead, we use an outboard Personal Scan Converter, with power modifications recommended by Steve Mann [5], to convert the SVGA output to NTSC video for the Sony Glasstron.

User location inputs to the system come from a Canadian Marconi Starboard GPS unit with a CSI SBX-2 unit that reads differential corrections from US Coast Guard transmitters along the West Coast. Both GPS boards are mounted inside the CPU casing, and the antennas are mounted at the top of the backpack frame. Operating within the system, these units provide one GPS update per second, with an average radius of error in short-term repeatability tests on the order of two meters. This is barely adequate to track the motion of a person walking at a medium pace. These were the lowest-cost differential-capable GPS units we could locate at the time (November 1998).
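To make "barely adequate" concrete, the arithmetic below compares the distance a wearer walks between fixes with the reported error radius. The update rate and error radius are the figures given above; the walking speeds are assumed typical values, not measurements from our trials.

```python
# Back-of-the-envelope check: how far does a walker move between GPS fixes,
# compared with the GPS error radius?
GPS_UPDATE_HZ = 1.0        # one fix per second (figure reported in the text)
GPS_ERROR_RADIUS_M = 2.0   # short-term repeatability error (figure reported in the text)


def meters_between_fixes(walk_speed_mps: float, update_hz: float = GPS_UPDATE_HZ) -> float:
    """Distance the wearer covers between two consecutive GPS fixes."""
    return walk_speed_mps / update_hz


def motion_to_error_ratio(walk_speed_mps: float) -> float:
    """Below 1.0, the true motion between fixes is smaller than the position error."""
    return meters_between_fixes(walk_speed_mps) / GPS_ERROR_RADIUS_M


if __name__ == "__main__":
    # Assumed typical walking speeds in m/s; not measured in our trials.
    for label, speed in [("slow", 0.8), ("medium", 1.4), ("brisk", 2.0)]:
        print(f"{label:>6}: {meters_between_fixes(speed):.1f} m per fix, "
              f"motion/error ratio {motion_to_error_ratio(speed):.2f}")
```

At the assumed medium pace of 1.4 m/s the wearer moves about 1.4 m per fix, less than the 2 m error radius, so consecutive fixes can differ as much through error as through actual walking. This is consistent with describing the update rate as only barely adequate.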

The user's head orientation is input from a magnetic head tracking unit mounted into the crown of a bicycle helmet worn by the user. The bicycle helmet was chosen after preliminary testing to stabilize the Glasstron and head tracker while walking. The head tracker is a Precision Navigation TCM-2 recommended by a researcher also testing outdoor wearable augmented reality applications [6].
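Head heading and GPS position together determine whether an object should appear on the display. The following is a minimal sketch under stated assumptions: positions are in a local east/north frame in meters, the tracker reports a magnetic compass heading in degrees clockwise from north, and an optional magnetic declination (east positive) converts that heading to true north to match the GPS frame. The 30° field of view is a placeholder, not a Glasstron specification.

```python
import math


def bearing_deg(user_en, target_en):
    """Compass bearing (degrees clockwise from north) from user to target.

    Positions are (east, north) tuples in meters in a local frame.
    """
    d_east = target_en[0] - user_en[0]
    d_north = target_en[1] - user_en[1]
    return math.degrees(math.atan2(d_east, d_north)) % 360.0


def relative_bearing_deg(user_en, target_en, heading_deg, declination_deg=0.0):
    """Angle of the target relative to the gaze direction, in [-180, 180).

    Negative means left of the gaze, positive means right. heading_deg is assumed
    to be a magnetic compass heading; declination_deg (east positive) converts it
    to true north so it matches the GPS-derived frame.
    """
    true_heading = (heading_deg + declination_deg) % 360.0
    return (bearing_deg(user_en, target_en) - true_heading + 180.0) % 360.0 - 180.0


def in_horizontal_fov(user_en, target_en, heading_deg, fov_deg=30.0, declination_deg=0.0):
    """True if the target lies within the display's horizontal field of view.

    fov_deg=30.0 is a placeholder value, not a measured display specification.
    """
    rel = relative_bearing_deg(user_en, target_en, heading_deg, declination_deg)
    return abs(rel) <= fov_deg / 2.0
```

The signed relative bearing is useful beyond the visibility test: the same angle can drive audio panning for objects outside the field of view.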

A Metricom wireless modem was chosen as a reliable and cost effective mobile networking connection. This unit has the strong disadvantage that the Metricom network is only available in a few metropolitan areas including our own. Despite this, the Metricom modem is adequate for local testing.

The system is inefficient in its use of power. The small desktop computer system draws considerable power, especially on boot-up. The built-in 120 VAC power supply is still used to provide ±12 V, ±5 V, and 3.3 V to the system and added components, so the whole system runs from a heavy 12 V battery whose output passes through an inverter to the computer power supply. This inefficient power arrangement needs to be redesigned.

5. Software Implementation

The mobile augmented reality system runs under the Windows NT 4.0 operating system. Windows NT was chosen because the APIs required for 3D graphics display, 3D sound playback, and the voice recognition engine run on that operating system. These APIs also run on Windows 95 and 98, but Windows NT supported our multithreaded applications more robustly and ran them more quickly. The code is written in C and C++ for flexibility, compatibility, and processing efficiency.

OpenGL was chosen as the 3D graphics API because it is a cross platform industry standard, runs efficiently, is widely supported by 3D acceleration hardware and one member of our team had slight prior experience coding animation with it. OpenGL is well suited to real time 3D character animation programming which is a major feature of the interface concepts we are testing. The choice of OpenGL did limit our computer hardware options as the only 3D acceleration chipset available in laptop computers at the time did not fully support the API.

Q Sound was chosen as the 3D sound engine and API. We first experimented with Aureal's hardware-accelerated 3D sound cards and API, which were marginally more realistic in generating the sensation of sound sources circling the user's head. However, the Aureal 3D sound drivers require Microsoft DirectSound3D, which was not implemented in Windows NT 4.0. We therefore chose Q Sound because it runs under Windows NT. It has proven flexible and robust to code against. We are testing its effectiveness with users.

IBM's Via Voice was chosen as the voice recognition engine because it is well established, supports multiple languages, and is very inexpensive when used for research purposes. Most voice recognizers require training for specific users. We are testing a system for usability with a wide range of wearers, so lengthy voice training with each test subject is impractical. Via Voice has a command mode that is fairly effective at recognizing common words spoken by a wide range of users. Early testing has confirmed the expected difficulty in recognizing certain accents, or commands spoken within a stream of other words or noise. We are working to accommodate these limitations by scripting appropriate hints from the virtual characters when they cannot recognize the user's voice response.
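The hinting strategy can be sketched as a small retry loop around a yes/no question. This is a hypothetical illustration: `recognize` and `say` are stand-in callables for the recognizer's result and the character's speech, not Via Voice API calls, and the hint wording and accepted words are illustrative assumptions.

```python
from typing import Callable, Optional, Sequence

YES_WORDS = {"yes", "yeah", "yep"}
NO_WORDS = {"no", "nope"}

# Illustrative hints, escalating from a gentle re-prompt to a more explicit one.
DEFAULT_HINTS = (
    "Sorry, I didn't catch that. Please answer yes or no.",
    "Try saying just the single word yes, or the single word no.",
    "Please speak a little louder and answer yes or no.",
)


def parse_yes_no(word: Optional[str]) -> Optional[bool]:
    """Map a recognized command word to True/False; None if absent or not yes/no."""
    if word is None:
        return None
    w = word.strip().lower()
    if w in YES_WORDS:
        return True
    if w in NO_WORDS:
        return False
    return None


def ask_yes_no(prompt: str,
               recognize: Callable[[], Optional[str]],
               say: Callable[[str], None],
               hints: Sequence[str] = DEFAULT_HINTS) -> Optional[bool]:
    """Ask a yes/no question, speaking the next hint after each failed recognition.

    recognize() and say() are hypothetical hooks for the recognizer and the
    character's speech. Returns None if every attempt fails.
    """
    say(prompt)
    for attempt in range(len(hints) + 1):
        answer = parse_yes_no(recognize())
        if answer is not None:
            return answer
        if attempt < len(hints):
            say(hints[attempt])
    return None
```

Restricting the vocabulary to a handful of command words plays to the strength of a speaker-independent command mode, while the escalating hints give the wearer a way to recover without any visible menu.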

6. The Augmented Reality Interface

The interface designs we are currently testing involve a 3D animated cartoon bee character who functions as a guide and mentor. She sets the user a simple task: find several virtual objects located in an open outdoor space. The objects are positioned relative to the point where the application is started, with the first GPS reading taken as ground zero. From there the wearer can walk freely to any of the objects, which in this application are rendered as cartoonish UFOs. The bee starts a conversation with the wearer by flying into the field of view and asking questions. The bee asks the user to "Go find those UFOs," and the task begins. The UFOs are a bit secretive and fly away when the wearer walks up close. When every UFO has been found, the bee asks the wearer to come and be tested for infection with alien ideas. Of course, the wearer has been up close to UFOs, so alien influences are detected. This initiates a final conversation with the bee that finishes the game.
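Placing objects relative to ground zero amounts to converting each later GPS fix into meters east and north of the first fix. The sketch below assumes an equirectangular (locally flat Earth) approximation, which should be adequate over a test field a few hundred meters across, and ignores wrap-around at the 180° meridian. The flee rule and its 5 m trigger and 15 m hop distances are hypothetical values illustrating how a UFO might retreat when approached.

```python
import math

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius


class LocalFrame:
    """East/north frame in meters anchored at the first GPS fix ("ground zero").

    Uses an equirectangular approximation, assumed adequate over a small area.
    """

    def __init__(self, lat0_deg, lon0_deg):
        self.lat0 = math.radians(lat0_deg)
        self.lon0 = math.radians(lon0_deg)

    def to_local(self, lat_deg, lon_deg):
        """Convert a later fix to (east, north) meters relative to ground zero."""
        d_lat = math.radians(lat_deg) - self.lat0
        d_lon = math.radians(lon_deg) - self.lon0
        east = EARTH_RADIUS_M * d_lon * math.cos(self.lat0)
        north = EARTH_RADIUS_M * d_lat
        return east, north


def flee_if_close(ufo_en, user_en, trigger_m=5.0, hop_m=15.0):
    """Hypothetical flee rule: if the wearer is within trigger_m of the UFO,
    move it hop_m farther away along the user-to-UFO direction.

    Returns (new_position, moved).
    """
    d_east, d_north = ufo_en[0] - user_en[0], ufo_en[1] - user_en[1]
    dist = math.hypot(d_east, d_north)
    if dist >= trigger_m:
        return ufo_en, False
    if dist == 0.0:
        d_east, d_north, dist = 0.0, 1.0, 1.0  # wearer exactly on the UFO: flee north
    scale = hop_m / dist
    return (ufo_en[0] + d_east * scale, ufo_en[1] + d_north * scale), True
```

Because every position is expressed relative to the first fix, the game can be started in any open outdoor location without surveying the site in advance.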

Our mobile augmented reality system suffers from the same problems with accurate registration that plague most augmented reality systems, problems that are the focus of intense research by several groups. Errors inherent in the GPS and head tracking systems make perfect alignment with the real world impossible in our system, so for the time being we have chosen to design around the problem. All 3D objects in the outdoor environment should look as though they would naturally fly or float, which suits flying saucers well. Each is given a sinusoidal 'floating around' behavior that changes its position and orientation slightly on all axes each frame. This intended motion masks the sense of unreality that tracking errors would otherwise cause. Likewise, the 3D animated characters are all designed to fly naturally, hence the choice of a bee as the central character. The intentional, lifelike variation in flying and hovering motions hides the tracking errors, so the wearer focuses on interacting with the characters and objects rather than on the weaknesses of the system.
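A minimal sketch of a sinusoidal 'floating around' offset follows. It computes the offset from elapsed time rather than accumulating per-frame increments, so the motion does not depend on frame rate. All amplitudes, frequencies, and the per-axis phase offsets are hypothetical values chosen for illustration.

```python
import math
from dataclasses import dataclass


@dataclass
class Hover:
    """Sinusoidal 'floating around' offsets for one object (illustrative values)."""
    amp_pos: tuple = (0.3, 0.2, 0.3)      # meters along x, y, z
    freq_pos: tuple = (0.23, 0.41, 0.31)  # Hz
    amp_rot: tuple = (4.0, 6.0, 3.0)      # degrees of pitch, yaw, roll
    freq_rot: tuple = (0.17, 0.11, 0.29)  # Hz
    phase: float = 0.0                    # per-object phase so objects drift out of step

    @staticmethod
    def _wave(amps, freqs, t, phase):
        # Each axis also gets a fixed phase offset (its index) so the axes
        # do not all pass through zero at the same instant.
        return tuple(a * math.sin(2.0 * math.pi * f * t + phase + i)
                     for i, (a, f) in enumerate(zip(amps, freqs)))

    def offset(self, t):
        """(position offset, rotation offset) at elapsed time t in seconds."""
        return (self._wave(self.amp_pos, self.freq_pos, t, self.phase),
                self._wave(self.amp_rot, self.freq_rot, t, self.phase))
```

Rendering code would add the position offset to the object's placement and the rotation offset to its orientation each frame. Using incommensurate frequencies on different axes keeps the combined motion from looking mechanically periodic, and an amplitude comparable to the tracking error is what lets the intended drift mask the unintended one.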

7. Initial User Experiences

Our mobile augmented reality system was developed as a test platform for the interface concepts described in this paper. We are in the process of conducting user trials to test the effectiveness of various elements of these interfaces by measuring task completion and user reactions with different features of the interface enabled. The interface application described in the previous section serves as a basis for one of these tests.

In the bee and UFOs application the bee can either guide the wearer to the UFOs or wait for the wearer to find them independently. We evaluate the effectiveness of the 3D character as a guide by comparing the time to find all UFOs between different test subjects randomly chosen to play the game with or without being led to the objects. Each test subject reads the following simple instructions:

"After the application starts, you will be given instructions by an animated, 3D character. At certain points during the application, you will be asked questions by the character. Respond to these questions with Yes or No. The application will be finished when you have completed the tasks that the character has asked you to do."

The test subject puts on the system as consistently as different body types allow. The person administering the test then starts a version of the application that either includes or excludes guidance from the bee. The test administrator does not know which application icon launches the guided version and cannot see what the user sees. The administrator then records the time to complete the task without intervening in the test. Only a small number of subjects have been tested, so no conclusions can be drawn at this time. Our experience with the system leads us to expect that point-to-point guidance by a character can be effective, particularly as the bee emits a buzzing sound that makes her easy to locate by 3D sound when she is outside the field of view. Only further testing can answer this question rigorously.
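One way to keep the administrator blind is to reshuffle the conditions onto neutral icon labels for each session and keep the key separately until the trial is over. The sketch below is a hypothetical illustration of such a protocol; the label names and the use of Python's `random.Random` are assumptions.

```python
import random


def make_blind_session(conditions=("guided", "unguided"), rng=None):
    """Assign conditions to neutral icon labels in random order for one session.

    The administrator is shown only the labels; the key is kept out of sight
    (for example, written to the session log) and consulted after the trial.
    """
    rng = rng if rng is not None else random.Random()
    shuffled = list(conditions)
    rng.shuffle(shuffled)
    labels = [f"Icon {chr(ord('A') + i)}" for i in range(len(shuffled))]
    return labels, dict(zip(labels, shuffled))
```

Reshuffling per session prevents the administrator from learning a fixed icon-to-condition mapping over repeated trials. The same function accepts any number of conditions, such as the three audio settings.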

Another test application, originally designed for young children, places colored shapes around the user, each of which can emit musical sounds. The user can walk up to a shape to trigger it, switching the music from one shape to the next. This application has been modified to test the effectiveness of 3D audio for location cueing in an outdoor augmented reality environment. The Q Sound engine can be instructed to render object-located sounds in full 3D with head-related transfer functions enabled, in plain stereo with no head-related transfer functions, or in mono with no directional cueing, so that only loudness changes as the wearer walks closer to the object. Different test subjects are evaluated on their ability to find the four objects with one of the three levels of audio cueing. Each test subject is given the following instructions to read:
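The difference between the mono and stereo conditions can be sketched as per-ear gains. This sketch assumes an inverse-distance law clamped at 1 m and an equal-power pan driven by the object's azimuth relative to the wearer's gaze (0° ahead, +90° right, -90° left). It does not model the head-related transfer functions of the full 3D condition, which Q Sound renders internally, and it is not the Q Sound API.

```python
import math


def distance_gain(distance_m, ref_m=1.0):
    """Inverse-distance attenuation, clamped to 1.0 inside ref_m."""
    return min(1.0, ref_m / max(distance_m, 1e-9))


def ear_gains(azimuth_deg, distance_m, mode):
    """Return (left, right) gains for mode 'mono' or 'stereo'."""
    g = distance_gain(distance_m)
    if mode == "mono":
        return g, g  # no direction cue: only loudness changes with distance
    if mode != "stereo":
        raise ValueError("mode must be 'mono' or 'stereo'")
    az = (azimuth_deg + 180.0) % 360.0 - 180.0  # wrap to [-180, 180)
    # Fold rear sources to the front: plain stereo cannot tell front from back.
    if az > 90.0:
        az = 180.0 - az
    elif az < -90.0:
        az = -180.0 - az
    p = (az + 90.0) / 180.0          # 0 = hard left, 1 = hard right
    theta = p * math.pi / 2.0
    # Equal-power pan: left**2 + right**2 == g**2 at every azimuth.
    return g * math.cos(theta), g * math.sin(theta)
```

The fold step makes the front/back ambiguity of plain stereo explicit: a source directly behind the wearer sounds the same as one directly ahead, which is part of what head-related transfer functions are meant to resolve.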

"After the application starts, you will need to find four objects: a green cube, a yellow dodecahedron, a blue tetrahedron, and a red sphere. Each object is animated and is capable of playing music. In order for an object to be "found," you must walk to the object until it is close to you. When you get close to an object you will hear a change in sound and you will see a change in the object's animation. Walk to each object until all four are found. After you have found all objects, a new character will appear and congratulate you."

Again, the test administrator selects one of three application choices without knowing its 3D audio setting, and notes the choice. A generated log file records the test subject's time to trigger each object. Here again, the number of test subjects is too small to draw a conclusion at this stage. Results from other investigators [7] and our experience with this system indicate that both stereo and full 3D audio cueing can be very helpful in locating virtual objects outside the field of view. We have been able to walk through both applications with the visual display turned off, using spatial audio alone as a guide.
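A per-object trigger log of the kind described above might look like the following. The object names come from the test instructions; the object positions, the 3 m trigger radius, and the CSV column names are hypothetical, since the actual log format is not specified here.

```python
import csv
import io
import math


class TriggerLog:
    """Hypothetical proximity-trigger logger: records, once per object, the elapsed
    time at which the wearer first came within trigger_m of it."""

    def __init__(self, objects, trigger_m=3.0):
        self.objects = dict(objects)   # name -> (east, north) in meters
        self.trigger_m = trigger_m
        self.found = {}                # name -> elapsed seconds at first trigger

    def update(self, t_elapsed, user_en):
        """Call once per position update; returns names newly triggered."""
        newly = []
        for name, (e, n) in self.objects.items():
            if name in self.found:
                continue
            if math.hypot(e - user_en[0], n - user_en[1]) <= self.trigger_m:
                self.found[name] = t_elapsed
                newly.append(name)
        return newly

    def all_found(self):
        return len(self.found) == len(self.objects)

    def to_csv(self):
        """Trigger times as CSV text, in the order the objects were found."""
        buf = io.StringIO()
        writer = csv.writer(buf)
        writer.writerow(["object", "seconds_to_trigger"])
        for name, t in sorted(self.found.items(), key=lambda kv: kv[1]):
            writer.writerow([name, f"{t:.1f}"])
        return buf.getvalue()


if __name__ == "__main__":
    log = TriggerLog({"green cube": (0.0, 10.0), "yellow dodecahedron": (15.0, 5.0),
                      "blue tetrahedron": (-10.0, 12.0), "red sphere": (20.0, 0.0)})
    log.update(5.0, (0.0, 8.0))
    print(log.to_csv())
```

Recording only the first trigger per object keeps the measure comparable across the three audio conditions, since later visits to an already-found object do not change the result.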

8. Further and Future Work

The authors are currently developing a new immersive augmented reality game that facilitates direct usability testing of more of our interface concepts. A user interacts with 3D animated characters and objects that make musical sounds. These objects appear as if located in real-world space. The user finds the objects by using 3D sound cues, direct observation, and following an animated guide character while walking around in any open outdoor location. Voice commands that the user issues to characters enable the user to assemble selected musical objects into harmonious ensembles. The guide character serves as the user's interface to the system through conversation. The guide character teaches new users how to assemble musical objects, then lets the user explore and create, offering help on request. Musical object data can be downloaded as text and sound files from Internet servers. Through this game we are testing real-world metaphors for wearable computer interfaces based on walking, looking, listening, and talking.

9. Conclusions

This paper has presented a set of interface design goals for intuitive interaction with wearable augmented reality computers. A low-cost test system has been built from design criteria arising from those interface goals. The system and software are enabling us to propose real-world metaphors for human-computer interaction and to test the efficacy of specific design choices that arise from those metaphors. We have begun testing some of the interface design principles. The broader concepts of conversational interfaces with animated characters and navigation by walking and looking still need to be tested. Manipulation of data through manipulating 3D objects in real-world space can also be tested with this system. We would also like to test general user acceptance of computer systems that seem more alive and present in the real world.

The authors believe that by meeting the challenge of providing new, more intuitive interfaces, wearable computer technology can lead the way to a more natural interaction between humans and machines. Rather than require the humans to become computer literate, we wish to make the computers more human literate. The introduction of wearable computers offers a special opportunity to change established expectations about how human computer interfaces should be designed. Wearable computers require a different set of interfaces from those developed for the desktop. The form that these interfaces should take needs to be explored while the technology is still early in development.

10. Acknowledgements

The authors would like to thank our faculty adviser Phil Hofstetter for his support and encouragement and Dr. Bruce Thomas of the University of South Australia for his valuable advice.

11. References

[1] Jun Rekimoto, Yuji Ayatsuka, Kazuteru Hayashi. Augment-able Reality: Situated Communication through Physical and Digital Spaces. In Proc. of the Second International Symposium on Wearable Computers (ISWC '98), 1998.

[2] Thad Starner, Bernt Schiele, Alex Pentland. Visual Contextual Awareness in Wearable Computing. In Proc. of the Second International Symposium on Wearable Computers (ISWC '98), 1998.

[3] Gerd Kortuem, Zary Segall, Martin Bauer. Context-Aware, Adaptive Wearable Computers as Remote Interfaces to 'Intelligent' Environments. In Proc. of the Second International Symposium on Wearable Computers (ISWC '98), 1998.

[4] Jim Spohrer. WorldBoard: What Comes After the WWW? http://trp.research.apple.com/events/ISITalk062097/Parts/WorldBoard/default.html

[5] Steve Mann. "wearhow.html": How to build a version of 'WearComp6'. http://wearcam.org/wearhow/index.html. Also in Circuit Cellar INK, Issue 95, feature article, June 1998, 8 pages (cover and pages 18 to 24).

[6] Bruce Thomas, Victor Demczuk, Wayne Piekarski, David Hepworth, and Bernard Gunther. A Wearable Computer System with Augmented Reality to Support Terrestrial Navigation. In Proc. of the Second International Symposium on Wearable Computers (ISWC '98), 1998.

[7] M. Billinghurst, J. Bowskill, M. Jessop, J. Morphett. A Wearable Spatial Conferencing Space. In Proc. of the Second International Symposium on Wearable Computers (ISWC '98), 1998.
